library(tidyverse)11 Residuals

When looking at a linear model of your data, there’s a measure you need to be aware of called residuals. The residual is the distance between what the model predicted and what the real outcome is. Take our model at the end of the correlation and regression chapter. Our model predicted Maryland’s women soccer should have outscored George Mason by a goal a year ago. The match was a 3-2 loss. So our residual is -2.

Residuals can tell you several things, but most important is if a linear model the right model for your data. If the residuals appear to be random, then a linear model is appropriate. If they have a pattern, it means something else is going on in your data and a linear model isn’t appropriate.

Residuals can also tell you who is under-performing and over-performing the model. And the more robust the model – the better your r-squared value is – the more meaningful that label of under or over-performing is.

Let’s go back to our model for men’s college basketball. For our predictor, let’s use Net FG Percentage - the difference between the two teams’ shooting success.

For this walkthrough:

Then load the tidyverse.

logs <- read_csv("data/cbblogs1525.csv")Rows: 110070 Columns: 60

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (10): Season, TeamFull, Opponent, HomeAway, W_L, URL, Conference, Team,...

dbl (49): Game, TeamScore, OpponentScore, TeamFG, TeamFGA, TeamFGPCT, Team3...

date (1): Date

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.First, let’s make the columns we’ll need.

residualmodel <- logs |> mutate(differential = TeamScore - OpponentScore, FGPctMargin = TeamFGPCT - OpponentFGPCT)Now let’s create our model.

fit <- lm(differential ~ FGPctMargin, data = residualmodel)

summary(fit)

Call:

lm(formula = differential ~ FGPctMargin, data = residualmodel)

Residuals:

Min 1Q Median 3Q Max

-51.053 -6.278 -0.221 5.928 68.482

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.55356 0.02885 19.19 <2e-16 ***

FGPctMargin 123.43359 0.25881 476.93 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 9.556 on 110063 degrees of freedom

(5 observations deleted due to missingness)

Multiple R-squared: 0.6739, Adjusted R-squared: 0.6739

F-statistic: 2.275e+05 on 1 and 110063 DF, p-value: < 2.2e-16We’ve seen this output before, but let’s review because if you are using scatterplots to make a point, you should do this. First, note the Min and Max residual at the top. A team has under-performed the model by 51 points (!), and a team has overperformed it by 68 points (!!). The median residual, where half are above and half are below, is just slightly below the fit line. Close here is good.

Next: Look at the Adjusted R-squared value. What that says is that 67 percent of a team’s scoring differential can be predicted by their FG percentage margin.

Last: Look at the p-value. We are looking for a p-value smaller than .05. At .05, we can say that our correlation didn’t happen at random. And, in this case, it REALLY didn’t happen at random. But if you know a little bit about basketball, it doesn’t surprise you that the more you shoot better than your opponent, the more you win by. It’s an intuitive result.

What we want to do now is look at those residuals. We want to add them to our individual game records. We can do that by creating two new fields – predicted and residuals – to our dataframe like this:

residualmodel <- residualmodel |> mutate(predicted = predict(fit), residuals = residuals(fit))Error in `mutate()`:

ℹ In argument: `predicted = predict(fit)`.

Caused by error:

! `predicted` must be size 110070 or 1, not 110065.Uh, oh. What’s going on here? When you get a message like this, where R is complaining about the size of the data, it most likely means that your model is using some columns that have NA values. In this case, the number of columns looks small - perhaps 3 - so let’s just get rid of those rows by using the calculated columns from our model:

residualmodel <- residualmodel |> filter(!is.na(FGPctMargin))Now we can try re-running the code to add the predicted and residuals columns:

residualmodel <- residualmodel |> mutate(predicted = predict(fit), residuals = residuals(fit))Now we can sort our data by those residuals. Sorting in descending order gives us the games where teams overperformed the model. To make it easier to read, I’m going to use select to give us just the columns we need to see and limit our results to games involving Maryland.

residualmodel |> filter(Team == 'Maryland') |> arrange(desc(residuals)) |> select(Date, Team, Opponent, W_L, differential, FGPctMargin, predicted, residuals)# A tibble: 301 × 8

Date Team Opponent W_L differential FGPctMargin predicted residuals

<date> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

1 2024-11-19 Maryl… Canisius W 71 0.287 36.0 35.0

2 2016-11-17 Maryl… St. Mar… W 48 0.115 14.7 33.3

3 2024-11-08 Maryl… Mount S… W 34 0.0260 3.76 30.2

4 2023-01-28 Maryl… Nebraska W 19 -0.087 -10.2 29.2

5 2023-12-12 Maryl… Alcorn … W 40 0.085 11.0 29.0

6 2024-12-17 Maryl… Saint F… W 54 0.211 26.6 27.4

7 2022-11-25 Maryl… Coppin … W 16 -0.0640 -7.35 23.3

8 2018-12-11 Maryl… Loyola … W 23 0.0100 1.79 21.2

9 2024-12-21 Maryl… Syracuse W 27 0.048 6.48 20.5

10 2024-01-27 Maryl… Nebraska W 22 0.0110 1.91 20.1

# ℹ 291 more rowsSo looking at this table, what you see here are the teams who scored more than their FG percentage margin would indicate. One of the predicted values should jump off the page at you.

Look at that Maryland-Nebraska game from 2023. The Huskers shot better than the Terps, and the model predicted Nebraska would win by 10 points. Instead, Maryland won by 19!

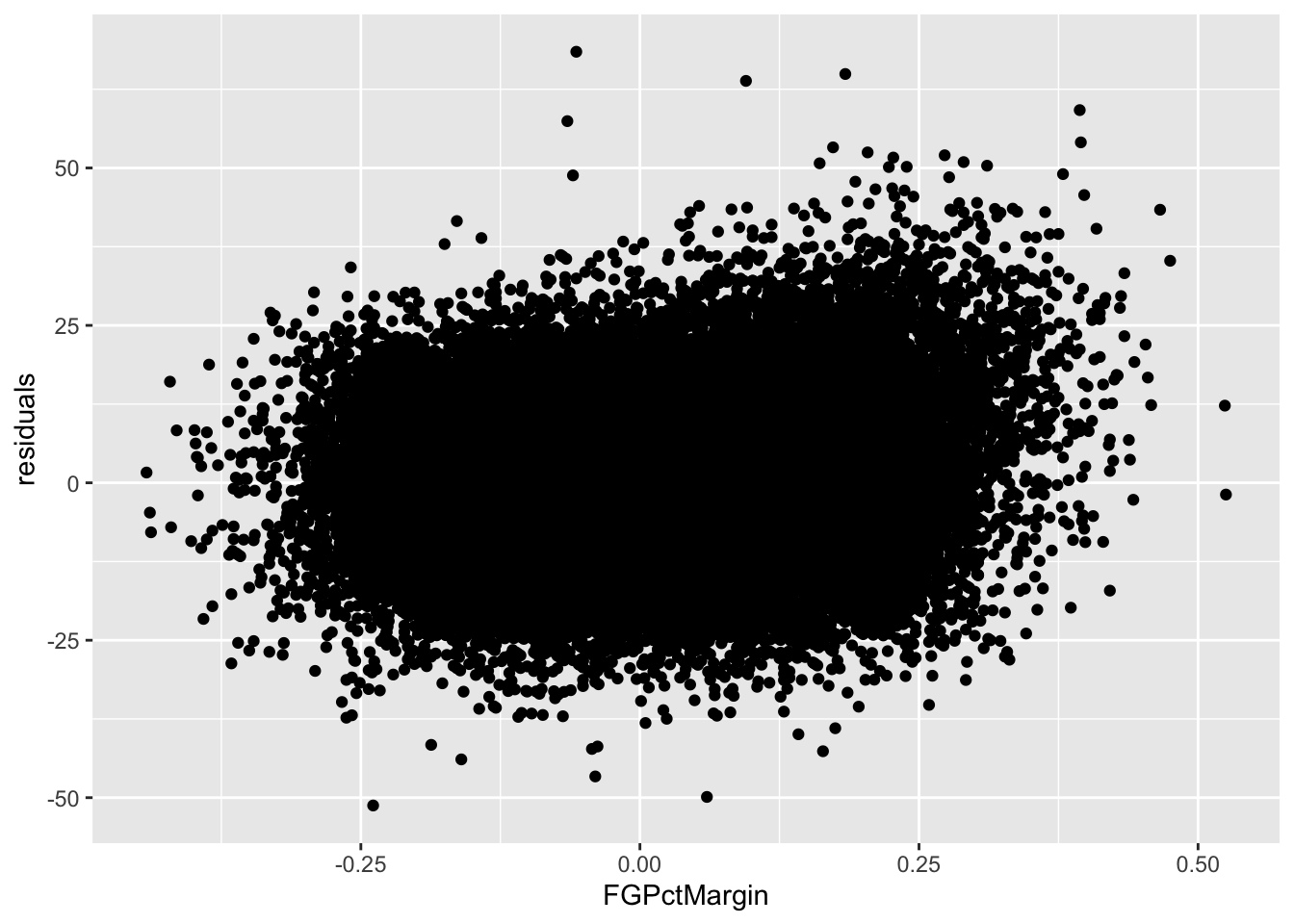

But, before we can bestow any validity on this model, we need to see if this linear model is appropriate. We’ve done that some looking at our p-values and R-squared values. But one more check is to look at the residuals themselves. We do that by plotting the residuals with the predictor. We’ll get into plotting soon, but for now just seeing it is enough.

The lack of a shape here – the seemingly random nature – is a good sign that a linear model works for our data. If there was a pattern, that would indicate something else was going on in our data and we needed a different model.

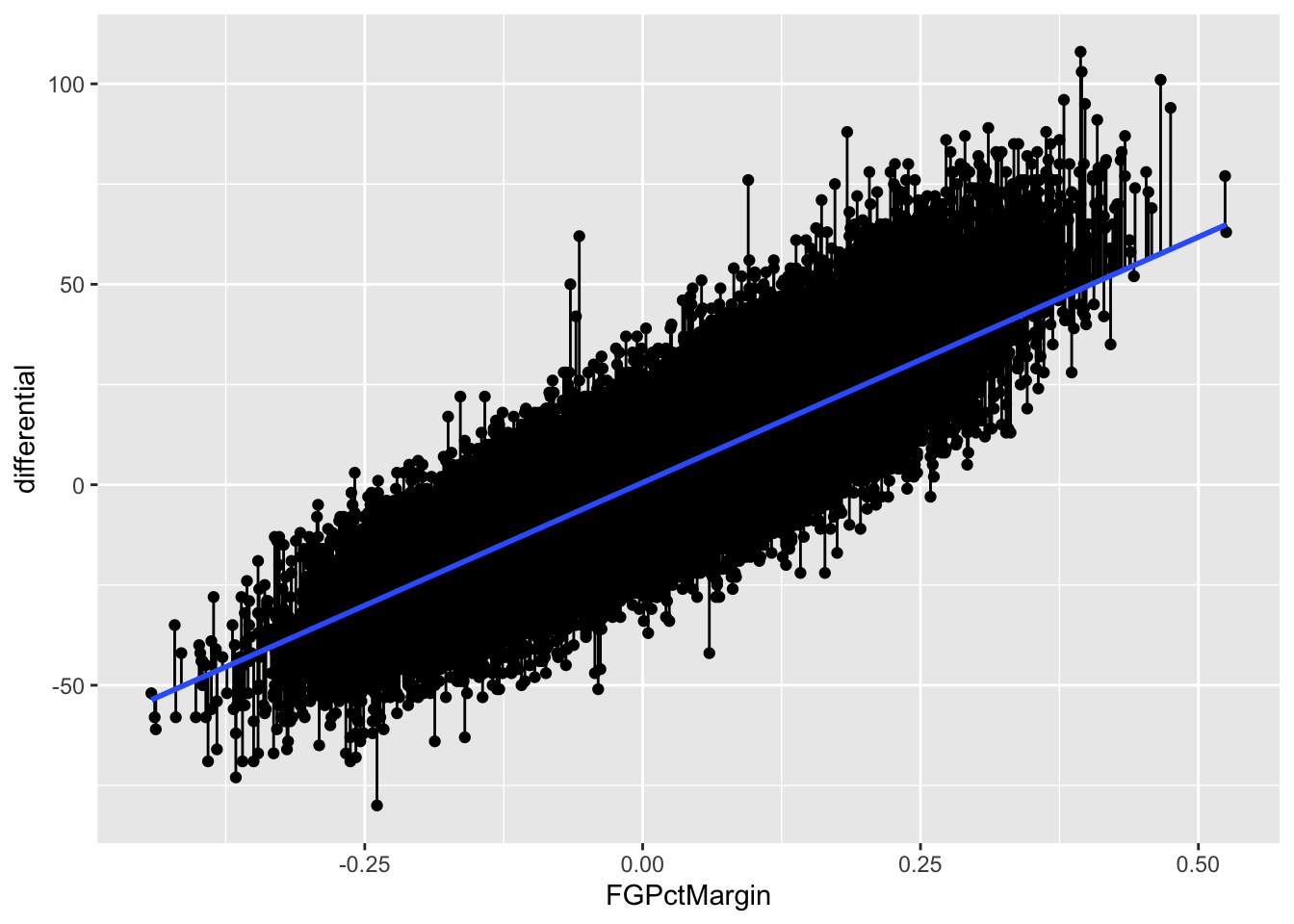

Another way to view your residuals is by connecting the predicted value with the actual value.

`geom_smooth()` using formula = 'y ~ x'

The blue line here separates underperformers from overperformers.

11.1 Fouls

Now let’s look at it where it doesn’t work as well: the total number of fouls

fouls <- logs |>

mutate(

differential = TeamScore - OpponentScore,

TotalFouls = TeamPersonalFouls+OpponentPersonalFouls

)pfit <- lm(differential ~ TotalFouls, data = fouls)

summary(pfit)

Call:

lm(formula = differential ~ TotalFouls, data = fouls)

Residuals:

Min 1Q Median 3Q Max

-81.081 -10.645 -0.129 9.596 106.596

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.143416 0.248854 16.65 <2e-16 ***

TotalFouls -0.080580 0.006875 -11.72 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 16.72 on 110063 degrees of freedom

(5 observations deleted due to missingness)

Multiple R-squared: 0.001246, Adjusted R-squared: 0.001237

F-statistic: 137.4 on 1 and 110063 DF, p-value: < 2.2e-16So from top to bottom:

- Our min and max go from -81 to positive 107

- Our adjusted R-squared is … 0.001237. Not much at all.

- Our p-value is … is less than than .05, so that’s something.

So what we can say about this model is that it’s statistically significant, but doesn’t really explain much. It’s not meaningless, but on its own the total number of fouls doesn’t go very far in explaining the point differential. Normally, we’d stop right here – why bother going forward with a predictive model that isn’t terribly predictive? But let’s do it anyway. Oh, and see that “(5 observations deleted due to missingness)” bit? That means we need to lose some incomplete data again.

fouls <- fouls |> filter(!is.na(TotalFouls))

fouls$predicted <- predict(pfit)

fouls$residuals <- residuals(pfit)fouls |> arrange(desc(residuals)) |> select(Team, Opponent, W_L, TeamScore, OpponentScore, TotalFouls, residuals) |> filter(!is.na(Opponent))# A tibble: 109,029 × 7

Team Opponent W_L TeamScore OpponentScore TotalFouls residuals

<chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

1 Bryant Thomas … W 147 39 34 107.

2 McNeese State Dallas … W 140 37 33 102.

3 Appalachian State Toccoa … W 135 34 35 99.7

4 James Madison Carlow W 135 40 29 93.2

5 Lamar Howard … W 121 32 35 87.7

6 Georgia Southern Carver … W 139 51 38 86.9

7 Youngstown State Francis… W 134 46 35 86.7

8 North Carolina C… St. And… W 127 40 32 85.4

9 Sam Houston State Southwe… W 120 33 22 84.6

10 Tennessee-Martin Champio… W 115 29 28 84.1

# ℹ 109,019 more rowsFirst, note all of the biggest misses here are all blowout games. The worst games of the season, the worst being Bryant vs. Thomas. The model missed that differential by … 107 points. The margin of victory? 108 points. In other words, this model is not great! But let’s look at it anyway.

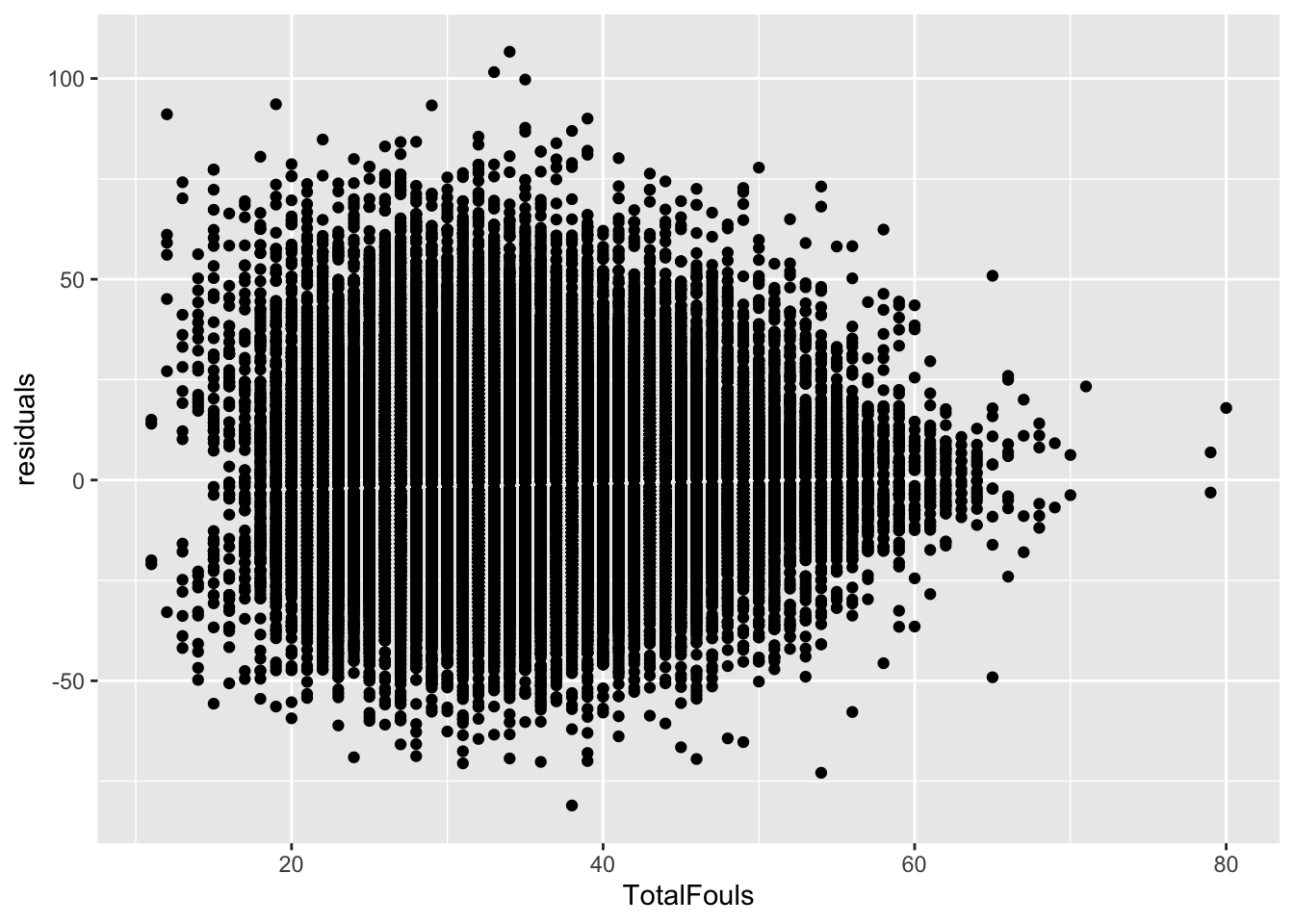

Well … it actually says that a linear model is appropriate. Which an important lesson – just because your residual plot says a linear model works here, that doesn’t say your linear model is good. There are other measures for that, and you need to use them.

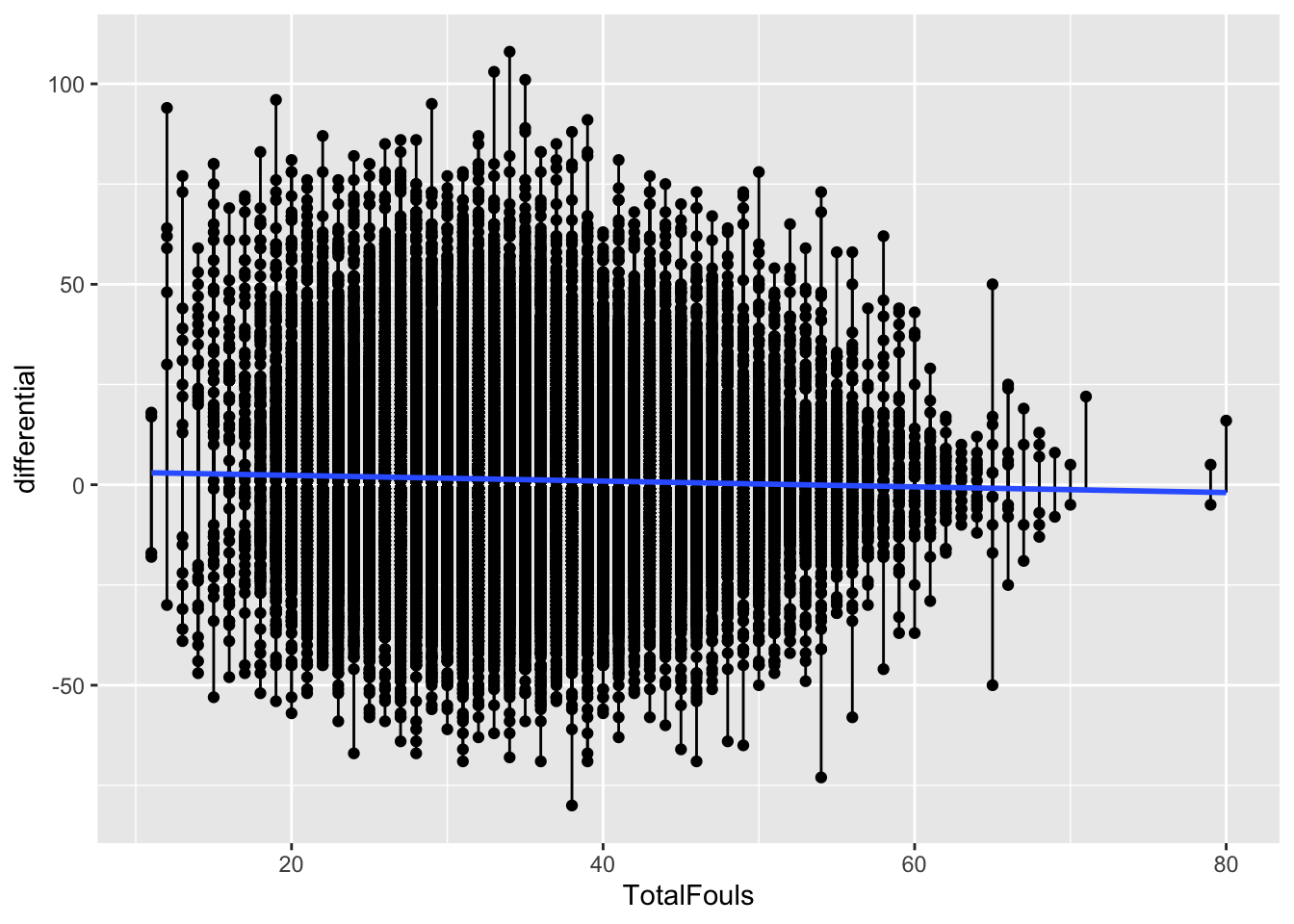

Here’s the segment plot of residuals – you’ll see some really long lines. That’s a bad sign. Another bad sign? A flat fit line. It means there’s no relationship between these two things. Which we already know.

`geom_smooth()` using formula = 'y ~ x'